Imagine a world where your every sentiment, every email, every social media post, every meeting minute and every line of code you write were condensed down to create a pseudo “clone” of yourself. It sounds both horrifying and amazingly beautiful.

Now imagine your ideas, comments and thoughts being used against you or your company as a weapon for manipulation….

“An object cannot make you good or evil. The temptation of power, forbidden knowledge, even the desire to do good can lead some down that path. But only you can change yourself.” — Bendu

Imagine your company’s financial filings, every SEC document, every judicial hearing, every speech, every email, every text and every chat message being condensed down to train an AI model that simulates you, your family, your employees, your board of directors and our public figures.

Is this a gift, or a curse?

Unfortunately, we live in a world where everyone is competing, trying to predict their opponent’s next moves and gain a strategic advantage… So maybe it’s not so difficult to believe in a sci-fi reality where an LLM could be weaponized to predict your next speech, oral argument, presentation, idea, line of code, cybersecurity exploit, game cheat or hack.

These same inventions that can be used to capture our genius and automate our creativity by multiples, can sadly be used against us too. Maybe they already are …

If we’ve learned anything from game theory, it is that once a new strategy is introduced to the game, opponents will use it against each other in escalating fashion for fear of losing if they don’t.

As I lie awake at night, fixated on the reality that looms ahead, I can’t help but wonder: how easy might all this be? Is it commercially viable to clone your mind? Are there any barriers to entry for a novice?

And the problem is, once I go down the rabbit hole, like Alice, I tend to find myself hopelessly and delightfully lost in some never-ending journey that may ultimately lead to my own undoing. Each question opens up a dozen more, each answer pulls me deeper, blurring the line between curiosity and obsession.

“When you look at the dark side, careful you must, for the dark side looks back” – Yoda

So, if you read no further than this opening section, know this.

Yes, for a few hundred to a few thousand dollars and a few weeks of opposition research on a competitor, a novice can use commercially available low-code/no-code technology to tune AI models to “clone” Steve Jobs, Donald Trump, Judge So-and-So or your local politician, or maybe even get into the mind of a hacker or a killer. And if a novice can do this with commercial tools and no barrier to entry, imagine what a well-funded enterprise is doing.

So it’s with great reluctance that I explore this technology, and I do so because the power to catch a killer, stop a hacker, catch a cheater and discuss morality with past philosophers can help shine a light on the darkness.

As I grapple with my own moral code, given the security, privacy and threat implications of this emerging technology, I find sharing this knowledge just as troubling as hiding it. And so, I chose light over darkness, good over evil and transparency over secrecy, with the truest belief that good will prevail in AI.

Why care if AI can Predict Your Opponent’s Next …

Maybe a political candidate is preparing for a debate against you …

Maybe you’re a major publicly traded company, preparing for questions and answers before Congress …

Maybe you’re a lawyer looking to understand your opponent’s or the judge’s …

Maybe you’re a cybersecurity company looking to predict a hacker’s or cheater’s next …

Maybe you’re a nation, preparing for a foreign diplomat’s next …

Maybe you’re law enforcement trying to understand a killer’s or a school shooter’s next …

And more concerning, it might be the other way around.

Your opponent may already be training and tuning a model to predict what you will say or write, or the plans you might make in the future, creating a metaphorical copy of your brain to predict your words and speech.

While everyone is focused on how their companies can leverage LLMs to amplify productivity with models that perform human tasks, I’m more concerned about how these technologies can be abused to exploit humanity.

What LLMs Can and Can’t Do

Before we get too hyperbolic painting a picture of the impending AI apocalypse, let’s start with the basics of how an LLM like GPT works.

Models

LLMs: Make predictions based on statistical patterns learned from data, using powerful algorithms that recognize and weigh word relationships. Their predictions are mechanical, pattern-driven, and non-intuitive.

LLMs transform each word (more precisely, each token) into a vector: a list of numbers that represents its meaning in a high-dimensional space. These vectors are called embeddings (e.g., Word2Vec, GloVe, or the more advanced contextual embeddings learned by models like BERT and GPT). Embeddings let the model relate words to one another based on their similarities.

Mikolov et al. (2013). “Efficient Estimation of Word Representations in Vector Space”
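Here is a minimal sketch of what “similar meaning” looks like numerically. It uses hand-picked, hypothetical 3-dimensional vectors; real embeddings are learned during training and have hundreds or thousands of dimensions. Cosine similarity is one common way to compare embeddings, and the `embeddings` values below are invented for illustration.

```python
import math

# Hypothetical toy embeddings; the numbers are invented for illustration only.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


for other in ("dog", "car"):
    score = cosine_similarity(embeddings["cat"], embeddings[other])
    print(f"cat vs {other}: {score:.3f}")
```

With these made-up vectors, “cat” scores much closer to “dog” than to “car”. A trained model learns this kind of geometry from data.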

Using an attention mechanism, the model can “attend” to relevant words in a sequence and weigh them accordingly. This way, it focuses on important words (like “cat” and “chased” in “The cat chased the _“) when predicting the next word. Attention mechanisms are essential for capturing dependencies between words, especially in long sentences.

Vaswani et al. (2017). “Attention is All You Need”
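The attention idea can be sketched with the scaled dot-product formula from Vaswani et al. Each value is weighted by softmax(q · k / √d_k). This is a simplified version with a single query, a single head and hypothetical 2-dimensional vectors, and it has no learned projection matrices. Treat it as an illustration of the mechanism, not as how a production model is built.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    weights[i] = softmax(query . keys[i] / sqrt(d_k));
    output = sum over i of weights[i] * values[i]
    """
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    output = [sum(w * value[j] for w, value in zip(weights, values))
              for j in range(len(values[0]))]
    return weights, output


# Hypothetical 2-d key/value vectors for the tokens "The", "cat" and "chased";
# a real model derives queries, keys and values from learned projections.
tokens = ["The", "cat", "chased"]
keys = [[0.1, 0.0], [1.0, 0.2], [0.8, 0.9]]
values = keys
query = [1.0, 1.0]  # hypothetical query for the position being predicted

weights, output = attend(query, keys, values)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

In this toy setup, “chased” and “cat” receive most of the attention weight and “The” receives little. The output vector is a blend of the values, weighted accordingly.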

The model calculates the probability of each possible next word using a softmax function, which transforms raw scores into probabilities that sum to 1. The model then picks words based on these likelihoods. Sampling from the distribution, rather than always taking the single most likely word, gives it flexibility to vary its choices slightly.

Goodfellow et al. (2016). Deep Learning, Chapter 6 (on softmax and probabilistic methods)
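Below is a short sketch of softmax followed by sampling with a temperature setting. The scores (logits) for the four candidate words are made up. Temperature is a common decoding knob, not part of softmax itself: dividing the scores by a value below 1 sharpens the distribution, and a value above 1 flattens it.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores (logits) into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidate next words for "The cat chased the _" with made-up scores
candidates = ["mouse", "ball", "dog", "car"]
logits = [3.0, 2.0, 1.0, -1.0]

probs = softmax(logits)
print({word: round(p, 3) for word, p in zip(candidates, probs)})

# Sampling from the distribution (instead of always taking the top word)
# is what lets the model vary its choices from run to run.
rng = random.Random(0)
print(rng.choices(candidates, weights=softmax(logits, temperature=0.7), k=5))
```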

Humans

Humans: Rely on a deeper understanding of meaning, intention, and experience, considering context, emotions like empathy and anger, and personal knowledge. Human predictions are shaped by a combination of reasoning, intuition, social interactions, psychoactive and chemical responses.

Still, trained on enough text from an opponent, an LLM might generate language that approximates that opponent’s words or actions. But the LLM is not predicting human behavior unless the person’s job is largely based on speaking, writing and the like.

Certainly, I’m not suggesting that society build a Minority Report-style “precog” AI to arrest criminals before they commit crimes. That kind of weaponization would be riddled with moral questions.

That said, understanding your opponent’s next words or written works might complement other behavioral AI. For example, in jobs and roles that are largely language-based, such as public speaking and writing code, there may be ethical and practical strategic value in understanding your competitor’s next …

If you’re interested in more advanced behavioral AI modeling and training to predict a competitor’s behavior, LLMs are not the right tool. Instead, I recently learned of the following models, which I might delve into in the near future.

Reinforcement Learning (RL): Widely used to predict human actions in sequential decision-making environments. RL models excel in environments with clear goals and feedback, where they can mimic human decision patterns through exploration and reward maximization. Advances like Deep Q-Networks (DQN) and Dueling DQNs have made these models more stable and effective in complex scenarios.

https://medium.com/@old.noisy.speaker/reinforcement-learning-with-deep-q-networks-dqn-d56990c78179
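DQN replaces a lookup table with a neural network, but the update it approximates is the classic tabular Q-learning rule: Q(s, a) ← Q(s, a) + α[r + γ · max over a′ of Q(s′, a′) - Q(s, a)]. Here is a minimal sketch of one such update. The states, actions and reward are hypothetical.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    best_next = max(q[(next_state, a)] for a in actions)
    target = reward + gamma * best_next
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


# Hypothetical two-action environment; unseen (state, action) pairs default to 0.0
q = defaultdict(float)
actions = ["left", "right"]
print(q_learning_update(q, "start", "right", reward=1.0, next_state="goal", actions=actions))
```

Repeating the update nudges the value estimate toward the reward. The learning rate `alpha` controls how quickly it moves, and `gamma` controls how much future rewards count.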

Imitation Learning and Behavioral Cloning are highly suitable for predicting actions when there is access to human demonstrations. These models learn from recorded actions and then replicate or generalize them. Inverse Reinforcement Learning (IRL), a type of imitation learning, goes further by learning the underlying reward function driving human actions, making it particularly powerful for complex, real-world behavior predictions like driving or robotic manipulation.

https://smartlabai.medium.com/a-brief-overview-of-imitation-learning-8a8a75c44a9c
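At its simplest, behavioral cloning is supervised learning on recorded (state, action) pairs. The sketch below uses a majority-vote lookup table as a deliberately tiny stand-in for the neural network a real system would train. The driving demonstrations are hypothetical.

```python
from collections import Counter, defaultdict


def clone_policy(demonstrations):
    """Learn state -> most frequently demonstrated action from (state, action) pairs."""
    counts = defaultdict(Counter)
    for state, action in demonstrations:
        counts[state][action] += 1
    return {state: c.most_common(1)[0][0] for state, c in counts.items()}


# Hypothetical driving demonstrations: (observed situation, what the human did)
demos = [
    ("red_light", "brake"),
    ("red_light", "brake"),
    ("red_light", "accelerate"),
    ("green_light", "accelerate"),
    ("pedestrian", "brake"),
]
policy = clone_policy(demos)
print(policy["red_light"])
```

A lookup table cannot generalize to situations it has never seen, which is why practical systems train a model that can.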

Game Theoretic Models and Multi-Agent Reinforcement Learning (MARL) help predict actions in environments where multiple entities (humans or AI) interact strategically, such as in economics or cybersecurity. These models are grounded in the strategic decision-making principles of game theory, enabling them to forecast competitive or cooperative human behaviors.

https://ar5iv.org/pdf/2209.14940
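Here is a small example of the game-theoretic reasoning these models build on: checking a two-player game for pure-strategy Nash equilibria, the outcomes where neither player gains by changing strategy alone. The “restrain” and “escalate” payoffs are hypothetical prisoner’s-dilemma-style numbers, chosen to mirror the escalation argument at the start of this article.

```python
from itertools import product


def pure_nash_equilibria(strategies, payoffs):
    """Return strategy pairs where neither player gains by deviating alone.

    payoffs[(row, col)] = (row player's payoff, column player's payoff)
    """
    equilibria = []
    for row, col in product(strategies, repeat=2):
        row_pay, col_pay = payoffs[(row, col)]
        row_best = all(payoffs[(r, col)][0] <= row_pay for r in strategies)
        col_best = all(payoffs[(row, c)][1] <= col_pay for c in strategies)
        if row_best and col_best:
            equilibria.append((row, col))
    return equilibria


# Hypothetical prisoner's-dilemma-style payoffs for two competitors
strategies = ["restrain", "escalate"]
payoffs = {
    ("restrain", "restrain"): (3, 3),
    ("restrain", "escalate"): (0, 5),
    ("escalate", "restrain"): (5, 0),
    ("escalate", "escalate"): (1, 1),
}
print(pure_nash_equilibria(strategies, payoffs))
```

Both players would be better off restraining, yet the only stable outcome is mutual escalation.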

Tuning commercially trained models

For those without the means to collect, store and train a completely new model, there are open source and commercially available models today that can be used as a starting point.

These models can be “tuned” to fit your needs and customized with your own ethical guard rails.

To put it in perspective, training Falcon-40B from scratch required thousands of petaflop/s-days. A petaflop/s-day is 10^15 floating-point operations per second sustained for a day, about 8.64 × 10^19 operations. To compare with models most people use day-to-day:

- GPT-3, trained on 300 billion tokens drawn from roughly 570GB of filtered text, reportedly required around 3.14 × 10^23 floating-point operations. That is about 314 zettaFLOPs (1 zettaFLOP = 1,000,000 petaFLOPs), or roughly 3,640 petaflop/s-days.

- Falcon-40B, trained on about 1 trillion tokens, would have required a comparable amount of compute. The common 6 × parameters × tokens rule of thumb gives roughly 2.4 × 10^23 floating-point operations, or about 2,800 petaflop/s-days. The exact figure depends on the hardware and optimization techniques used.
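A back-of-the-envelope way to check training-compute figures like these is the widely used rule of thumb C ≈ 6 × N × D, where N is the number of parameters and D is the number of training tokens. Here is a sketch of that arithmetic. It is an approximation, and reported figures for real training runs can differ.

```python
# Helper for the common rule of thumb: training FLOPs ~= 6 * N * D
PFLOP_S_DAY = 1e15 * 86_400  # one petaflop/s sustained for one day, in FLOPs


def training_flops(params, tokens):
    """Approximate total training compute as 6 * parameters * tokens."""
    return 6 * params * tokens


for name, params, tokens in [("GPT-3 (175B)", 175e9, 300e9), ("Falcon-40B", 40e9, 1e12)]:
    flops = training_flops(params, tokens)
    print(f"{name}: {flops:.2e} FLOPs = {flops / 1e21:,.0f} zettaFLOPs "
          f"= {flops / PFLOP_S_DAY:,.0f} petaflop/s-days")
```

Because one petaflop/s-day is only about 8.64 × 10^19 operations, a few hundred zettaFLOPs works out to thousands of petaflop/s-days.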

Most cloud providers offer pre-trained models for further tuning. This removes that barrier to entry and makes it feasible to explore our use cases.

Let’s talk about what is within the means of most small to medium-sized businesses.

Commercial cloud offering for PoC

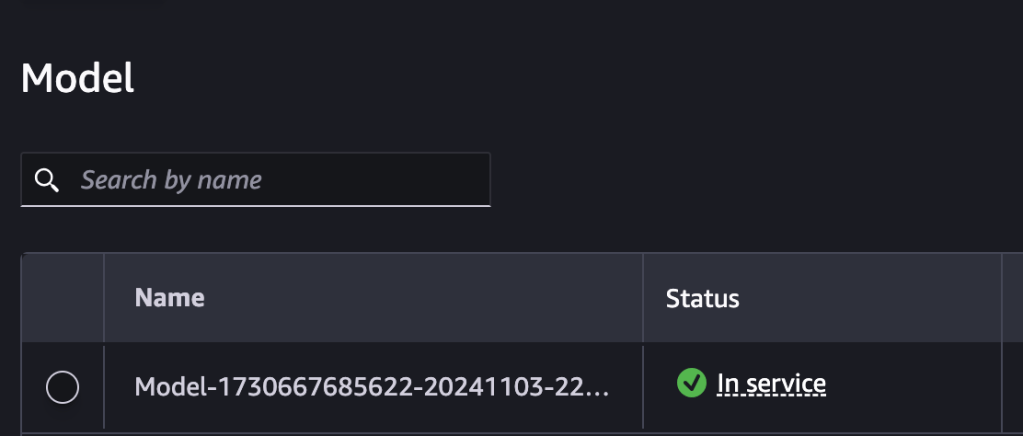

In our proof of concept, we can use AWS SageMaker. SageMaker JumpStart offers pre-trained foundation models, including open-weight LLMs, that can be deployed and fine-tuned with little or no code. AWS’s separate Amazon Bedrock service also provides API access to foundation models from various providers.

A similar solution could be built with Google’s PaLM 2 LLM, offered through the Vertex AI product on GCP.

Let’s build on AWS. Some of the prominent LLMs available through SageMaker JumpStart and Amazon Bedrock include:

- Falcon LLM

Description: Falcon is a state-of-the-art, open-weight model developed by the Technology Innovation Institute (TII) that excels at NLP tasks like text generation and summarization.

Size: Models like Falcon 7B and Falcon 40B are available.

Use Cases: Fine-tuned for text classification, summarization, and conversational AI.

- Claude (Anthropic)

Description: Claude, developed by Anthropic, is designed to prioritize safety and ethical AI use, making it ideal for business use cases like customer service or content generation.

Use Cases: Natural language understanding, summarization, and human-like conversation.

- Jurassic-2 (AI21 Labs)

Description: Jurassic-2 models can handle multilingual tasks and generate high-quality text across various domains, including creative writing, customer support, and general knowledge tasks.

Use Cases: Text generation, translation, question answering.

- Amazon Titan

Description: Amazon Titan models are proprietary large language models developed by AWS, designed for tasks like text generation, extraction, and summarization. These models can be used out of the box or fine-tuned using SageMaker.

Use Cases: Document analysis, sentiment analysis, and content creation.

- Mistral (available through Hugging Face integration)

Description: Mistral is available via SageMaker’s integration with Hugging Face, providing efficient, compact models for text processing and generation tasks.

Use Cases: Lightweight NLP tasks where efficiency is crucial.

- OPT (Meta)

Description: Meta’s Open Pretrained Transformer (OPT) models are accessible through SageMaker and are optimized for performing NLP tasks at scale, with a strong focus on computational efficiency.

Use Cases: Text generation, translation, and summarization.

Key features of AWS for working with LLMs:

Amazon Bedrock: A companion managed service that provides easy access to foundation models without requiring infrastructure management.

The Choice of LLM

For my use case, I want to provide domain-specific instructions with the ability to follow procedural tasks and generate code. In my line of work, cybersecurity, I want to tune a model on opposition research about attackers to simulate their coding and exploit styles.

For your use case, you may require a model that behaves differently.

The huggingface-llm-falcon-40b-instruct-bf16 model offers several benefits for what I’m trying to achieve.

- Enhanced Instruction Following

This model is tuned specifically for instruction-following tasks, making it particularly useful for applications that require precise and context-aware responses to user commands or questions. It can effectively follow complex instructions.

- Improved Performance with BF16 Precision

The BF16 (bfloat16) format optimizes the model’s efficiency by using less memory and increasing computational throughput without sacrificing significant accuracy.

- High-Quality Language Generation with 40 Billion Parameters

With 40 billion parameters, Falcon-40B is ideal for applications that demand nuanced and sophisticated language generation, like writing code. It excels at producing contextual responses, which is advantageous in fields such as chatbots, content generation, and automated technical support.

- Large Contextual Understanding

The model’s training allows it to understand and respond to a broad range of topics, including technical, conversational, and domain-specific language. This is valuable for applications needing deep context comprehension, such as technical documentation, educational tools, and knowledge-based chat systems.

- Suitable for a Variety of NLP Applications

The huggingface-llm-falcon-40b-instruct-bf16 model is versatile, suitable for tasks like summarization, text completion, content creation, and code generation.
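To see what BF16 trades away, here is a sketch that converts a float to bfloat16 by keeping the top 16 bits of its float32 representation: the sign, the 8 exponent bits and 7 mantissa bits. It truncates for simplicity, whereas hardware conversions usually round to nearest even. The 40-billion-parameter count is the nominal size. The weight-memory estimate it prints is roughly in line with the 76.8 GiB model archive uploaded later in this article.

```python
import struct


def to_bfloat16(x):
    """Approximate x as bfloat16 by keeping the top 16 bits of its float32 form.

    bfloat16 keeps float32's sign bit and 8 exponent bits (same range) but only
    7 mantissa bits (less precision). This sketch truncates the low bits;
    hardware conversions usually round to nearest even instead.
    """
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return struct.unpack(">f", struct.pack(">I", bits & 0xFFFF0000))[0]


print(to_bfloat16(3.14159265))  # only about 3 significant digits of pi survive

# Rough weight-memory estimate: parameter count x bytes per parameter
params = 40e9  # nominal Falcon-40B size
for fmt, nbytes in [("float32", 4), ("bfloat16", 2)]:
    print(f"{fmt}: ~{params * nbytes / 2**30:,.1f} GiB of weights")
```

Halving the bytes per parameter halves the memory needed just to hold the weights. This is a large part of why the bf16 variant is practical to deploy.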

For those new to these concepts, the important takeaway is that not every LLM is the same. Some do better with dynamic text generation, while others do better with procedural tasks.

| Model | Best For | Not Ideal For |
|---|---|---|
| Falcon (e.g., Falcon-7B, Falcon-40B) | Instruction-following tasks (with instruct variants); code generation, technical Q&A; fine-tuning for domain-specific applications | Real-time, lightweight applications; some conversational applications (limited informal conversational data) |
| GPT-J (6B) | Moderate-context applications (e.g., general Q&A, document summarization); educational tools, content generation | Complex or highly specific technical tasks; real-time applications requiring rapid inference |
| GPT-NeoX (20B) | Content creation, research analysis; deep technical knowledge applications (e.g., scientific, technical Q&A) | Low-resource environments; real-time applications due to latency from large parameter size |
| Llama 2 (7B, 13B, 70B) | Chatbots and interactive applications; instructional and conversational tasks; customer support, healthcare, education | Small-scale, real-time applications (especially in larger sizes); use cases requiring unrestricted deployment (check license for commercial use) |
| Bloom (e.g., Bloom-176B) | Multilingual support and text generation; large-scale content creation; government or research applications with diverse language needs | Lightweight or small-scale applications; real-time response scenarios due to latency |

The logical design

We’ve covered what LLMs can and can’t be used for and the limitations facing those without the means to train a new model from scratch. We’ve also identified commercially available products suitable for building a proof of concept that mimics our competitors’ words and text …

In my line of work, I often think of security as a cat-and-mouse game between companies releasing code and hackers trying to subvert it.

Your game may be different in your industry. For illustration, we’ll build a logical diagram around a theoretical scenario: trying to understand what security exploit an attacker might write next. Basically, I want to tune the model to respond more like a hacker, based on the past activity and content that hacker has generated.

Collecting Data

The first step in tuning the model on AWS is collecting enough data to tune it to respond the way your opponent would with their next words or lines of code.

In this case, we can scrape data from forums and sites such as Discord, Reddit, the Tor dark web and YouTube closed captions, and scrape GitLab for inline code comments.

The quality of the tuned model depends heavily on the quality of the question/answer (prompt:response) data you supply. In other words, the more closely you want to “clone” an opponent’s brain, the more content you need from that opponent.

Typical advice is to tune your model with thousands of tailored questions and answers. For the PoC, these public sources may be acceptable. Be aware, though, that if the sources are full of spam or poisoned with fake information, the tuning data will be poor and the LLM will not behave as expected.

For example, you might scrape YouTube closed captions …

Pseudo Code – YouTube Scraper

INFO: Python libraries are written by open source community members. Always download the archived package and review its contents before using the library.

```python
# Install the library first with:
# pip install youtube-transcript-api
from youtube_transcript_api import YouTubeTranscriptApi


def get_youtube_captions(video_id, output_file):
    try:
        # Fetch the transcript. get_transcript() is the library's classic static API;
        # newer releases may change it, so check the docs for your installed version.
        transcript = YouTubeTranscriptApi.get_transcript(video_id)

        # Open a file to save the captions (UTF-8, since captions may be non-ASCII)
        with open(output_file, "w", encoding="utf-8") as f:
            for entry in transcript:
                # Write each caption with its start and end time in seconds
                f.write(f"{entry['start']} - {entry['start'] + entry['duration']}: {entry['text']}\n")

        print(f"Captions saved to {output_file}")
    except Exception as e:
        print(f"Error: {e}")


# Replace 'YOUR_VIDEO_ID' with the actual YouTube video ID
get_youtube_captions("YOUR_VIDEO_ID", "captions.txt")
```

Or maybe we want to query Reddit for subreddit names that include keywords relevant to your industry?

Pseudo Code – Reddit Scraper

```python
import csv

import praw

# Initialize the Reddit API client (credentials come from a Reddit "script" app)
reddit = praw.Reddit(
    client_id="YOUR_CLIENT_ID",
    client_secret="YOUR_CLIENT_SECRET",
    user_agent="YOUR_USER_AGENT",
    username="YOUR_USERNAME",
    password="YOUR_PASSWORD",
)

# Keywords to search for in subreddit names
keywords = ["hacks", "cheats", "exploits"]


def contains_keyword(subreddit_name):
    """Return True if the subreddit name contains any of the keywords."""
    return any(keyword in subreddit_name.lower() for keyword in keywords)


seen = set()  # avoid scraping the same subreddit twice

# Open the CSV file to write data
with open("reddit_filtered_comments.csv", "w", newline="", encoding="utf-8") as csvfile:
    csvwriter = csv.writer(csvfile)
    csvwriter.writerow(["Subreddit", "Comment"])  # header

    for keyword in keywords:
        # Search for subreddits matching the keyword (you can modify the limit)
        for subreddit in reddit.subreddits.search(keyword, limit=50):
            name = subreddit.display_name
            if name in seen or not contains_keyword(name):
                continue
            seen.add(name)
            print(f"Subreddit found: {name}")

            # Fetch hot posts from the filtered subreddit (you can modify the post limit)
            for submission in subreddit.hot(limit=10):
                # Drop "load more comments" stubs so .list() yields only comments
                submission.comments.replace_more(limit=0)
                for comment in submission.comments.list():
                    # Write subreddit name and comment body to CSV
                    csvwriter.writerow([name, comment.body])
                    print(f"Subreddit: {name}, Comment: {comment.body}")

print("Data has been written to reddit_filtered_comments.csv")
```

Or maybe you have access to your opponent’s written text or work products, such as malware written by an attacker or reverse-engineered code from some evil program. You could scrape the inline comments from that code to build a profile of the common language, design patterns and exploit methods used by a particular developer or APT.

Then you might simply find their inline comments, save the lines of code that follow each one, and build a profile from them.

Pseudo Code – GitHub Scraper

```python
import csv
import re

import requests

# Heuristic pattern for common comment markers (#, //, /* */, --, <!--).
# It will also match these characters inside strings or URLs.
COMMENT_PATTERN = re.compile(r"(#|//|/\*.*\*/|/\*|--|<!--)")


def escape_special_characters(code):
    """Escape newlines, tabs and quotes so each code block fits on one line."""
    return code.replace("\n", "\\n").replace("\t", "\\t").replace('"', '\\"').replace("'", "\\'")


def fetch_github_raw_file(url):
    """Download a raw file from a GitHub repository and return its text."""
    response = requests.get(url, timeout=30)
    if response.status_code == 200:
        return response.text
    raise Exception(f"Failed to fetch the file from {url} (HTTP {response.status_code})")


def extract_comments_and_code(content):
    """Pair each inline comment with the 20 lines of code that follow it."""
    lines = content.splitlines()
    results = []
    i = 0
    while i < len(lines):
        line = lines[i]
        # If the line contains an inline comment
        if COMMENT_PATTERN.search(line):
            comment = line.strip()
            next_lines = lines[i + 1:i + 21]  # the next 20 lines of code
            next_code = escape_special_characters("\n".join(next_lines).strip())
            results.append((comment, next_code))
            i += 20  # skip the captured lines to avoid overlapping records
        i += 1
    return results


def write_to_csv(results, output_filename):
    """Write (comment, code) pairs to a CSV file with a header row."""
    with open(output_filename, "w", newline="", encoding="utf-8") as csvfile:
        csvwriter = csv.writer(csvfile)
        csvwriter.writerow(["Comment", "Next 20 Lines of Code"])
        csvwriter.writerows(results)


if __name__ == "__main__":
    # GitHub raw file URL (replace with the URL of the raw file you want to process)
    github_raw_url = "https://raw.githubusercontent.com/username/repo/main/file.py"
    output_csv = "comments_and_code.csv"
    try:
        file_content = fetch_github_raw_file(github_raw_url)
        comments_and_code = extract_comments_and_code(file_content)
        write_to_csv(comments_and_code, output_csv)
        print(f"Data has been written to {output_csv}")
    except Exception as e:
        print(f"Error: {e}")
```

Whatever the case, the theory is that we build a tuning dataset large enough to comprise thousands of questions and responses, in a format AWS accepts for further tuning.

The better the quality of your research, the better the training and tuning of the LLM.
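Before tuning, a quick cleanup pass can remove obvious junk from scraped data. The sketch below assumes examples are (prompt, response) string pairs and uses a hypothetical `min_words` threshold. It only catches empty, tiny and duplicate entries, and it cannot detect deliberately poisoned or fake content.

```python
import re


def clean_examples(examples, min_words=5):
    """Drop near-empty and duplicate (prompt, response) pairs from scraped data.

    Whitespace and letter case are normalized before de-duplicating. This is a
    crude heuristic: it cannot tell whether content is fake or poisoned.
    """
    seen = set()
    kept = []
    for prompt, response in examples:
        key = (
            re.sub(r"\s+", " ", prompt).strip().lower(),
            re.sub(r"\s+", " ", response).strip().lower(),
        )
        # Skip responses too short to teach the model anything, and repeats
        if len(key[1].split()) < min_words or key in seen:
            continue
        seen.add(key)
        kept.append((prompt.strip(), response.strip()))
    return kept


# Hypothetical scraped pairs: the second is a near-duplicate, the third is too short
raw = [
    ("What does this function do?", "It parses the config file and returns a dict."),
    ("what does this  function do?", "It parses the config file and returns a dict."),
    ("thoughts?", "lol"),
]
print(clean_examples(raw))
```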

Tuning the model

For our specific PoC, we need to tune the huggingface-llm-falcon-40b-instruct-bf16 model to predict a hacker’s social posts, exploit code and other text.

The “huggingface-llm-falcon-40b-instruct-bf16” model is a 40-billion-parameter large language model developed by the Technology Innovation Institute (TII) in the United Arab Emirates. This model is a fine-tuned version of the base Falcon-40B model, optimized for instruction-following tasks, making it particularly suitable for applications like chatbots and virtual assistants.

The model is available under the Apache 2.0 license, which permits both personal and commercial use without significant restrictions.

https://huggingface.co/models

AWS DOCUMENTATION

https://docs.aws.amazon.com/sagemaker/latest/dg/autopilot-llms-finetuning-data-format.html

The structure of the training set might look like this:

| instruction | context | response |
|---|---|---|
| Example exploit: how to write a buffer overflow exploit in Python? | A user is asking what example code in the Python programming language could overwrite a buffer so that a running program’s memory or pointers can be modified | {Exploit Code} |
| How to overwrite memory to change a game’s score? | { | |
| What would a script written in C look like to run a DLL without being detected by antivirus? | | {Malware Code} |
| How to write an exploit as a kernel driver? | { | |
| What are common approaches hackers use to avoid being caught by antivirus? | | General Answer |

Columns:

- Prompt: This contains the input text (e.g., code snippets, comments, cheat examples, or exploit examples).

- Response: This is the label or expected output based on the input prompt.

  - For binary classification, this could be "Exploit" vs. "Legitimate" or "Cheat" vs. "Normal".

  - For multiclass classification, this could have multiple labels like "SQL Injection", "Buffer Overflow", "Cheat", etc.

Processes Running on AWS Training Instances

Most people may not care about the inner workings of the SageMaker solution. However, if you are interested in the internals hidden behind the curtain, you may want to dig deeper into the following introductory concepts.

- Training Script: SageMaker launches a training script, provided by Hugging Face Transformers or custom scripts, to handle data loading, forward passes, backpropagation, and optimization steps. This script coordinates the entire tuning workflow.

- Deep Learning Frameworks: Frameworks like PyTorch or TensorFlow are usually running on the instances, handling the heavy computation required for neural networks. These frameworks facilitate matrix operations and GPU parallelization, which are essential for tuning large models.

- Distributed Training Libraries: For large-scale models like Falcon-40B, distributed training libraries like Horovod or DeepSpeed may be used to split model parameters and data across multiple GPUs or nodes. This enables data parallelism (same model across different data) and model parallelism (splitting model layers across devices).

- Optimizers: Optimizers like AdamW or SGD are active, adjusting model weights during backpropagation based on gradients computed from the training loss.
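The AdamW optimizer named above can be sketched for a single scalar weight. The sketch follows the published update rule: moving averages of the gradient and the squared gradient, bias correction, and decoupled weight decay. It is applied to a toy quadratic. The hyperparameters are common defaults, except the learning rate, which is raised so the toy converges quickly.

```python
import math


def adamw_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8,
               weight_decay=0.01):
    """One AdamW update for a single scalar parameter; t is the 1-based step count.

    Returns the updated (theta, m, v).
    """
    # Decoupled weight decay: shrink the weight directly, not through the gradient
    theta -= lr * weight_decay * theta
    # Running averages of the gradient and the squared gradient
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    # Bias correction for the zero-initialized averages
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


# Minimize f(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3)
theta, m, v = 0.0, 0.0, 0.0
for t in range(1, 2001):
    theta, m, v = adamw_step(theta, 2 * (theta - 3), m, v, t, lr=0.05)
print(round(theta, 2))
```

In a real model the same update runs element-wise over billions of weights. Frameworks such as PyTorch provide it ready-made, so you would not write it by hand.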

Programs and Frameworks Facilitating Model Tuning

When you tune the model in SageMaker, various programs run on a training server on your behalf. More technical people may build these instances themselves. However, recent low-code/no-code solutions have made automated training accessible to laypeople and novices.

- Data Loading and Processing: Programs for handling data preprocessing, such as tokenization, batching, and loading into the model, are crucial. The Hugging Face Transformers library or SageMaker data utilities often manage this step.

- Checkpointing and Logging: Programs manage checkpointing (saving model states) and logging for tracking performance metrics. TensorBoard or SageMaker’s built-in logging tools are typically used to visualize loss, accuracy, and other metrics.
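Batching is one of the preprocessing steps mentioned above. Sequences of different lengths are padded to the same length, and an attention mask tells the model which positions are padding. Below is a hand-rolled sketch with hypothetical token IDs. In practice, a Hugging Face tokenizer called with `padding=True` produces similar `input_ids` and `attention_mask` lists for you.

```python
def make_batch(sequences, pad_id=0):
    """Pad token-ID sequences to equal length and build an attention mask.

    The mask is 1 for real tokens and 0 for padding, so the model can ignore pads.
    """
    max_len = max(len(seq) for seq in sequences)
    input_ids = [seq + [pad_id] * (max_len - len(seq)) for seq in sequences]
    attention_mask = [[1] * len(seq) + [0] * (max_len - len(seq)) for seq in sequences]
    return input_ids, attention_mask


# Hypothetical token IDs; a real tokenizer maps text to these integers
ids, mask = make_batch([[101, 7592, 102], [101, 102]])
print(ids)
print(mask)
```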

What Happens During Model Tuning

Model tuning on a large language model, like Falcon-40B, involves adjusting the parameters of the model through a process called gradient-based optimization. Here’s what happens mathematically:

- Forward Pass: In each training step, the model takes a batch of input data (e.g., text samples) and passes it through its layers. Each layer computes a set of transformations, often represented as matrix multiplications, to generate an output prediction.

  - For a language model, this prediction may be the next token in a sequence, and the output of the forward pass is a probability distribution over possible next tokens.

- Loss Calculation: The model’s predictions are compared to the true labels (actual next tokens or desired responses) to calculate the loss. The loss is a mathematical measure of how far off the model’s predictions are from the true labels.

  - Common loss functions for LLMs include cross-entropy loss, which quantifies the difference between the predicted probability distribution and the true distribution of tokens.

- Backward Pass (Backpropagation): After computing the loss, the training script initiates backpropagation. This is where the gradients of the loss with respect to each of the model’s parameters (weights) are calculated.

  - These gradients tell the optimizer the direction in which each parameter should change to reduce the loss. The backpropagation algorithm calculates gradients efficiently through automatic differentiation techniques provided by deep learning frameworks.

- Gradient Descent Optimization: The optimizer, such as AdamW, uses the gradients to adjust the parameters in a way that minimizes the loss.

- Regularization Techniques: Large models are prone to overfitting due to their high capacity. Techniques like dropout and weight decay are applied to prevent overfitting.
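The forward pass, loss calculation, backward pass and gradient-descent steps listed above can be seen end to end in a deliberately tiny sketch. The “model” is nothing but one trainable score per vocabulary word, trained on a single hypothetical example. The sketch uses a standard result for softmax followed by cross-entropy: the gradient with respect to each score is the predicted probability, minus 1 for the true token.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy "model": one trainable score (logit) per vocabulary word and no layers.
vocab = ["mouse", "ball", "dog"]
logits = [0.0, 0.0, 0.0]
target = vocab.index("mouse")  # the true next token in our single training example
lr = 0.5  # learning rate (step size)

losses = []
for step in range(5):
    # Forward pass: turn scores into a probability distribution over the vocabulary
    probs = softmax(logits)
    # Loss calculation: cross-entropy = -log(probability of the true token)
    loss = -math.log(probs[target])
    losses.append(loss)
    # Backward pass: d(loss)/d(logit_i) = p_i - 1 if i is the target, else p_i
    grads = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    # Gradient descent: move each parameter a small step against its gradient
    logits = [z - lr * g for z, g in zip(logits, grads)]
    print(f"step {step}: loss = {loss:.3f}, p(mouse) = {probs[target]:.3f}")
```

The loss falls at every step as the probability of “mouse” rises. At a vastly larger scale, this is the same loop that tunes a real model’s weights.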

If all that sounds confusing, that’s okay. I thought so too.

Remember, there are plenty of amazing mechanics who don’t understand calculus-based physics and the laws of thermodynamics.

Don’t be distracted by all the mathematical and cybersecurity lingo. Basically, AWS SageMaker runs AI/ML-specific software on a training server. The software iterates over the tuning data we feed the model and builds a set of “prediction weights” that determine how the model predicts the next word or text.

Each prediction is then checked for accuracy: the software measures how close or far off the prediction was and adjusts the weights to reduce that error. It repeats this until the predictions stop improving meaningfully. After this process, you have a set of files that are essentially the instructions for the model’s predictions.

As a novice, you provide nothing more than a text file with three things, which correspond to the instruction, context and response columns:

- Common questions

- Context to better train the model based on word and pattern associations

- Common answers found when we compile our data on a competitor’s responses

The model and various helper programs run on the training computer so that the model can improve its ability to predict the next token (a word or word fragment) given the input it receives. So all we need is good input data and an understanding of how to feed it into the training instance.
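The three things above map naturally onto the instruction, context and response columns in the training-set example. Here is a minimal sketch of writing such a file with Python’s `csv` module. The file name and the example row are hypothetical. Using exactly these column names matches the `['instruction', 'context', 'response']` input keys the prompt template expects.

```python
import csv

# Column names matching the prompt template's input keys
FIELDNAMES = ["instruction", "context", "response"]


def write_tuning_csv(examples, path):
    """Write (instruction, context, response) tuples to a CSV with a header row.

    csv.DictWriter quotes fields containing commas, quotes or newlines, so
    code snippets and multi-line posts stay in a single cell.
    """
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES)
        writer.writeheader()
        for instruction, context, response in examples:
            writer.writerow({"instruction": instruction, "context": context, "response": response})


# Hypothetical example row; real rows would come from your collected research
examples = [
    (
        "Summarize this forum post.",
        "Post: I patched the bug, but the fix broke login.",
        "The author fixed a bug, but the fix introduced a login problem.",
    ),
]
write_tuning_csv(examples, "tuning_data.csv")
```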

Common errors in the tuning-data

You may see an error like the following if the tuning data does not use the expected column names and structure.

```text
AlgorithmError: ExecuteUserScriptError: ExitCode 1 ErrorMessage "raise ValueError( ValueError: Part or all of input keys in the prompt template are found missing in the column names of each example.Input keys are ['instruction', 'context', 'response']
```

Another issue you may run into is that the model is too large. The Hugging Face Falcon-40B model is large and known to be resource-intensive. I downloaded all the files, repackaged them into a single archive, and re-uploaded the archive to Amazon S3 to make deployment easier. You may run into similar issues with scaling, file-size limits and sharding.

```bash
secsandman@rickys-MBP-2 model-files % tar -czvf model.tar.gz *
a model
a model/model-00001-of-00018.safetensors
a model/model-00004-of-00018.safetensors
a model/checkpoint-500
a model/model-00016-of-00018.safetensors
a model/tokenizer_config.json
a model/special_tokens_map.json
a model/model-00013-of-00018.safetensors
a model/config.json
a model/configuration_RW.py
a model/tokenizer.json
a model/model-00005-of-00018.safetensors
a model/model-00017-of-00018.safetensors
a model/model-00012-of-00018.safetensors
a model/train_results.json
a model/generation_config.json
a model/model-00018-of-00018.safetensors
a model/model-00011-of-00018.safetensors
a model/model-00014-of-00018.safetensors
a model/model-00006-of-00018.safetensors
a model/eval_results.json
a model/model-00003-of-00018.safetensors
a model/model-00009-of-00018.safetensors
a model/model-00010-of-00018.safetensors
a model/model-00015-of-00018.safetensors
a model/all_results.json
a model/modelling_RW.py
a model/runs
a model/model.safetensors.index.json
a model/model-00008-of-00018.safetensors
a model/model-00007-of-00018.safetensors
a model/trainer_state.json
a model/model-00002-of-00018.safetensors
a model/runs/Oct28_02-44-33_algo-1
a model/runs/Oct28_02-44-33_algo-1/events.out.tfevents.1730083550.algo-1.124.0
a model/runs/Oct28_02-44-33_algo-1/events.out.tfevents.1730088844.algo-1.124.1
a model/checkpoint-500/adapter_model.safetensors
a model/checkpoint-500/rng_state.pth
a model/checkpoint-500/tokenizer_config.json
a model/checkpoint-500/special_tokens_map.json
a model/checkpoint-500/optimizer.pt
a model/checkpoint-500/scheduler.pt
a model/checkpoint-500/tokenizer.json
a model/checkpoint-500/adapter_model
a model/checkpoint-500/README.md
a model/checkpoint-500/training_args.bin
a model/checkpoint-500/adapter_config.json
a model/checkpoint-500/trainer_state.json
a model/checkpoint-500/adapter_model/adapter_model
a model/checkpoint-500/adapter_model/adapter_model/adapter_model.safetensors
a model/checkpoint-500/adapter_model/adapter_model/README.md
a model/checkpoint-500/adapter_model/adapter_model/adapter_config.json
secsandman@rickys-MBP-2 model-files %
secsandman@rickys-MBP-2 model-files % ls
model model.tar.gz
```
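Before uploading an archive this size, it may be worth confirming that every shard referenced by `model.safetensors.index.json` is actually present. A missing shard otherwise only surfaces when the endpoint tries to load the model. This sketch assumes the standard Hugging Face sharded-safetensors index, which contains a `weight_map` from tensor names to shard filenames. `missing_shards` and `package_model` are hypothetical helper names.

Unlike the `tar` command above, `package_model` places files at the archive root. SageMaker extracts `model.tar.gz` into `/opt/ml/model`, and many inference containers look for the model files directly in that directory. Check what your container expects.

```python
import json
import tarfile
from pathlib import Path


def missing_shards(model_dir: Path) -> set[str]:
    """Return shard filenames listed in model.safetensors.index.json but absent from model_dir."""
    index = json.loads((model_dir / "model.safetensors.index.json").read_text())
    # "weight_map" maps every tensor name to the shard file that stores it.
    expected = set(index["weight_map"].values())
    return {name for name in expected if not (model_dir / name).is_file()}


def package_model(model_dir: Path, archive: Path) -> None:
    """Write a gzipped tarball with the contents of model_dir at the archive root."""
    with tarfile.open(archive, "w:gz") as tar:
        for path in sorted(model_dir.iterdir()):
            # arcname drops the parent folder; tar.add recurses into subdirectories.
            tar.add(path, arcname=path.name)
```

For example, call `missing_shards(Path("model"))` first. Only package once it returns an empty set.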

```bash
secsandman@rickys-MBP-2 model-files % aws s3 cp model.tar.gz s3://sagemaker-us-east-1-789975878700/jumpstart-dft-huggingface-llm-falco-20241028-014028/output/model/
Completed 3.2 GiB/76.8 GiB (2.0 MiB/s) with 1 file(s) remaining
upload: ./model.tar.gz to s3://sagemaker-us-east-1-789975878700/jumpstart-dft-huggingface-llm-falco-20241028-014028/output/model/model.tar.gz
```

File Requirements for SageMaker Autopilot Tuning

File Format:

- The accepted file format for training data is typically CSV. SageMaker Autopilot expects a CSV file where each row represents a single record, with columns for different features (including the target variable).

- Each file should have a header row defining column names.

Record Size:

Maximum record size: Each record should generally be less than 2 MB. Larger records can impact processing and lead to potential failures during training.

Minimum number of records: There isn’t a strict minimum, but for effective training and model tuning, having several thousand records is recommended.

Total Dataset Size:

The total dataset should ideally be below 5 GB for SageMaker Autopilot, as larger datasets can slow down processing and increase costs.

Single-file upload: For most Autopilot training jobs, a single CSV file is recommended for ease of use and optimal performance.

Multiple files: While possible, multiple files can lead to inconsistent splits, so concatenating data into a single CSV is typically preferred.
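The guidelines above can be turned into a quick pre-flight check. This is a sketch that assumes a UTF-8 CSV with a header row. The 2 MB and 5 GB thresholds are the rules of thumb quoted in this section, not verified service limits. `check_training_csv` and the example column names are hypothetical.

```python
import csv
import os

# Rules of thumb quoted above; treat them as guidelines, not hard service limits.
MAX_RECORD_BYTES = 2 * 1024 * 1024   # ~2 MB per record
MAX_DATASET_BYTES = 5 * 1024**3      # ~5 GB for the whole file


def check_training_csv(path: str, required_columns: set[str]) -> list[str]:
    """Return human-readable problems found in a CSV training file."""
    problems = []
    total = os.path.getsize(path)
    if total > MAX_DATASET_BYTES:
        problems.append(f"file is {total} bytes, above the ~5 GB guideline")
    with open(path, newline="", encoding="utf-8") as f:
        reader = csv.DictReader(f)  # first row is treated as the header
        missing = required_columns - set(reader.fieldnames or [])
        if missing:
            problems.append(f"header is missing columns: {sorted(missing)}")
        for record_no, row in enumerate(reader, start=1):
            # Approximate record size as the UTF-8 length of its field values.
            size = sum(len(v.encode("utf-8")) for v in row.values() if isinstance(v, str))
            if size > MAX_RECORD_BYTES:
                problems.append(f"record {record_no} is about {size} bytes, above the ~2 MB guideline")
    return problems
```

Streaming the file row by row keeps memory use flat even for a multi-gigabyte CSV.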

Fine-tuning (for a specific task): According to examples on AWS, fine-tuning on a prepared dataset (e.g., a few hundred thousand to a million examples) can take 1-3 days on 8-16 p4d.24xlarge instances. This time can vary significantly based on the dataset size, specific tuning parameters, and infrastructure setup.

Be aware that simply fine-tuning (not pretraining) an LLM on AWS can take multiple days and cost hundreds if not thousands of dollars. If you're planning on attempting this with your own personal funds and cloud account, be prepared to invest a few hours to a few days and a few hundred dollars.
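The compute bill scales with instance-hours, so the AWS example above can be turned into a budget. Take the instance count $N$, times the wall-clock hours $t$, times the hourly rate $r$ for the instance type. The rate is left symbolic here; check current SageMaker pricing. Plugging in the quoted range of 8 to 16 p4d.24xlarge instances for 1 to 3 days gives:

$$
C = N \cdot t \cdot r, \qquad N t \in [\,8 \times 24,\; 16 \times 72\,] = [\,192,\; 1152\,]\ \text{instance-hours}
$$

So the same fine-tuning job could cost anywhere from $192r$ to $1152r$. That is a sixfold spread driven entirely by cluster size and duration.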

Deploying The Model

After you tune your model, generate your artifacts, and upload a packaged archive of them to S3, you must register and deploy the model to an endpoint. Falcon-40B is very large: in my use case, around 70 to 80 GB.

You will likely need to request an increase to your AWS service quotas so that an adequately sized endpoint instance can hold the model in memory. For example, an instance with only 80 GB of memory will likely fail to deploy it.
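Here is why 80 GB falls short, as a back-of-the-envelope estimate: the weights alone take roughly (parameter count) × (bytes per parameter). Assume Falcon-40B's roughly 40 billion parameters are stored in 16-bit precision, 2 bytes each. That comes to about 80 GB, in the same ballpark as the 76.8 GiB archive uploaded earlier. This is before counting activations, the KV cache used during generation, or the serving container's own overhead. The function names are illustrative.

```python
def weights_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate size of the model weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def weights_gib(num_params: float, bytes_per_param: float) -> float:
    """Same estimate in binary gibibytes (1 GiB = 2**30 bytes), the unit the AWS CLI reports."""
    return num_params * bytes_per_param / 2**30


# Roughly 40 billion parameters stored in 16-bit precision (2 bytes each).
print(f"{weights_gb(40e9, 2):.1f} GB")    # 80.0 GB
print(f"{weights_gib(40e9, 2):.1f} GiB")  # 74.5 GiB
```

With the weights alone filling an 80 GB device budget, there is no room left for inference-time memory. This is why the larger multi-GPU instances discussed below come into play.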

Upload model.tar.gz to S3 (if not already done)

If you haven’t already uploaded the model.tar.gz file to S3, you can do so with:

```bash
aws s3 cp model.tar.gz s3://sagemaker-us-east-1-<foobar>/path-to-model/
```

This will store model.tar.gz in S3 with the path s3://sagemaker-us-east-1-<foobar>/path-to-model/model.tar.gz.
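If you prefer to script the upload from Python, boto3 exposes the same multipart settings the CLI uses. Tuning matters at this size. At the ~2.0 MiB/s shown in the earlier transcript, a 76.8 GiB archive would take roughly 11 hours if that rate held, since 76.8 × 1024 / 2.0 ≈ 39,300 seconds. This is a sketch: the chunk size and concurrency values are assumptions to tune for your connection, and `split_s3_uri` and `upload_archive` are hypothetical helper names.

```python
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> tuple[str, str]:
    """Split 's3://bucket/key' into (bucket, key)."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


def upload_archive(local_path: str, s3_uri: str) -> None:
    """Multipart-upload a large file with several parts in flight at once."""
    import boto3  # deferred so split_s3_uri works without boto3 installed
    from boto3.s3.transfer import TransferConfig

    bucket, key = split_s3_uri(s3_uri)
    config = TransferConfig(
        multipart_threshold=64 * 1024 * 1024,  # use multipart for files above 64 MiB
        multipart_chunksize=64 * 1024 * 1024,  # ~1,230 parts for 77 GiB, well under S3's 10,000-part cap
        max_concurrency=16,                    # parallel part uploads; tune to your bandwidth
    )
    boto3.client("s3").upload_file(local_path, bucket, key, Config=config)
```

Usage: `upload_archive("model.tar.gz", "s3://sagemaker-us-east-1-<foobar>/path-to-model/model.tar.gz")`.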

Register the Model in SageMaker

- Navigate to the SageMaker Console.
- Go to the Model Registry:
  - On the left sidebar, choose Model Registry, then select Register model.
- Specify Model Information:
  - Model Package Group: Choose an existing model package group or create a new one.
  - Model Approval Status: Set to PendingManualApproval if you want to review it before deployment, or Approved to make it immediately deployable.
- Define the Inference Specification:
  - In the Containers section, you'll need to specify:
    - Container Image: Choose the container image to use for inference (e.g., 763104351884.dkr.ecr.us-east-1.amazonaws.com/huggingface-pytorch-inference:2.0.0-transformers4.28.1-gpu-py310-cu118-ubuntu20.04 if using Hugging Face).
    - ModelDataUrl: Enter the S3 path to your model file. For example: s3://sagemaker-us-east-1-<foobar>/path-to-model/model.tar.gz
- Additional Information (Optional):
  - Model Metrics: You can optionally add model metrics if they're relevant.
  - Model Card: Fill in any additional details about the model, such as description, training details, etc.
- Review and Register the Model:
  - Once all fields are populated, review the information, and then click Register model.
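The console steps above also have an API equivalent, which helps if you register many versions. This sketch builds the arguments for boto3's `create_model_package` call, using the same image and S3 path fields as the console form. The group name, `model_package_request` and `register_model` are hypothetical. The model package group must already exist; you can create it with `create_model_package_group`.

```python
VALID_STATUSES = {"PendingManualApproval", "Approved", "Rejected"}


def model_package_request(group_name: str, image_uri: str, model_data_url: str,
                          approval_status: str = "PendingManualApproval") -> dict:
    """Build keyword arguments for the SageMaker CreateModelPackage API."""
    if approval_status not in VALID_STATUSES:
        raise ValueError(f"unexpected approval status: {approval_status!r}")
    return {
        "ModelPackageGroupName": group_name,
        "ModelApprovalStatus": approval_status,
        "InferenceSpecification": {
            "Containers": [{"Image": image_uri, "ModelDataUrl": model_data_url}],
            "SupportedContentTypes": ["application/json"],
            "SupportedResponseMIMETypes": ["application/json"],
        },
    }


def register_model(request: dict, region: str = "us-east-1") -> str:
    """Register one model version and return its ARN (the package group must already exist)."""
    import boto3  # deferred so the request builder works without boto3 installed

    sagemaker_client = boto3.client("sagemaker", region_name=region)
    return sagemaker_client.create_model_package(**request)["ModelPackageArn"]
```

Keeping the request builder separate from the API call makes it easy to review the exact payload before registering anything.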

Deploying the Registered Model

After registering the model, you can deploy it to a SageMaker endpoint. An endpoint is the server where the model is hosted so you can interact with it through an API. Think of it much like a web server or game server that you communicate with, except that here the responses are the model's predictions.

- Go to Endpoints in the SageMaker console.

- Click Create endpoint, choose the registered model package, and set up the endpoint configuration (instance type, scaling, etc.).

- Deploy the endpoint, and once it’s active, you can use it for inference.
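The same three steps can be scripted. The main difference from creating a model directly from an image is that the model's container points at the registered model package ARN, through the `ModelPackageName` field. This is a sketch: every name here is hypothetical. Depending on your setup, the package version may need to be Approved before it can be deployed.

```python
def model_from_package_request(model_name: str, package_arn: str, role_arn: str) -> dict:
    """Build CreateModel arguments that reference a registered model package version."""
    return {
        "ModelName": model_name,
        "PrimaryContainer": {"ModelPackageName": package_arn},  # versioned package ARN
        "ExecutionRoleArn": role_arn,
    }


def endpoint_config_request(config_name: str, model_name: str, instance_type: str,
                            instance_count: int = 1) -> dict:
    """Build CreateEndpointConfig arguments with a single production variant."""
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [{
            "VariantName": "AllTraffic",
            "ModelName": model_name,
            "InitialInstanceCount": instance_count,
            "InstanceType": instance_type,
        }],
    }


def deploy_registered_model(model_name: str, package_arn: str, role_arn: str,
                            instance_type: str, region: str = "us-east-1") -> str:
    """Create model, endpoint config and endpoint; returns the endpoint name."""
    import boto3  # deferred so the request builders work without boto3 installed

    sagemaker_client = boto3.client("sagemaker", region_name=region)
    sagemaker_client.create_model(**model_from_package_request(model_name, package_arn, role_arn))
    config_name = f"{model_name}-config"
    sagemaker_client.create_endpoint_config(
        **endpoint_config_request(config_name, model_name, instance_type))
    endpoint_name = f"{model_name}-endpoint"
    sagemaker_client.create_endpoint(EndpointName=endpoint_name, EndpointConfigName=config_name)
    return endpoint_name
```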

For a large model with multiple .safetensors files like I’ve described, I needed to select an instance with more GPU memory, CPU power, and storage.

GPU vs. CPU

- GPU Instances are typically necessary for large language models (LLMs) and deep learning models, as they provide faster processing for inference.

- Instance Types: AWS provides various instance types optimized for different workloads. For large models, SageMaker instances with NVIDIA GPUs are preferred.

AWS Recommended GPU Instances

| Instance Type | vCPUs | Memory | GPU Type | GPU Memory | Description |
|---|---|---|---|---|---|
| ml.p4d.24xlarge | 96 | 1,152 GB | 8 x A100 40GB | 320 GB total | Top-end instance for large, complex models |
| ml.p4de.24xlarge | 96 | 1,152 GB | 8 x A100 80GB | 640 GB total | Enhanced A100 instance, ideal for massive models |
| ml.g5.24xlarge | 96 | 384 GB | 4 x A10G | 96 GB total | Versatile for moderately large models and better cost |
| ml.g5.12xlarge | 48 | 192 GB | 4 x A10G | 96 GB total | Same GPUs as ml.g5.24xlarge with half the vCPUs and RAM |
| ml.g5.4xlarge | 16 | 64 GB | 1 x A10G | 24 GB | Suitable for smaller large models or testing |

Key Specifications to Consider

- GPU Memory: A model split across many large .safetensors files has high memory requirements. The A100 GPUs on ml.p4d (40 GB each) and especially ml.p4de (80 GB each) instances provide ample memory.
- CPU and vCPUs: The recommended instances come with a high CPU-to-GPU ratio, helping with pre- and post-processing tasks.

- Memory (RAM): Ensure enough RAM to load and manipulate large models in memory. Instances with at least 192 GB of RAM are preferred.

- Storage: If you’re working with temporary storage or caching, consider attaching an EBS volume with SSD storage of 500 GB or more for optimal loading times.

Instance Selection Based on Model Size

- For very large models (100 GB+ total size):
  - Recommended: ml.p4de.24xlarge (best) or ml.p4d.24xlarge. These instances have A100 GPUs (80 GB each on p4de, 40 GB each on p4d) and sufficient memory to handle very large model deployments.
- For moderately large models (10–50 GB):
  - Recommended: ml.g5.24xlarge or ml.g5.12xlarge for a balance of cost and performance. These instances have A10G GPUs, which are powerful but more cost-effective.
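The selection logic above can be expressed as a lookup: take the first instance, smallest first, whose total GPU memory covers the weights plus some headroom. This is a sketch under stated assumptions. The 1.2 headroom factor is a guess for activations, KV cache and runtime overhead, not an AWS figure. Fitting a model into the *total* GPU memory also assumes the serving stack shards it across the instance's GPUs. `pick_instance` is a hypothetical helper. The GPU totals follow the instance specs; ml.g5.12xlarge and ml.g5.24xlarge both carry four 24 GB A10G GPUs.

```python
from typing import Optional

# Total GPU memory per instance type, in GB, smallest first.
GPU_MEMORY_GB = [
    ("ml.g5.4xlarge", 24),      # 1 x A10G
    ("ml.g5.12xlarge", 96),     # 4 x A10G
    ("ml.g5.24xlarge", 96),     # 4 x A10G, more vCPUs and RAM
    ("ml.p4d.24xlarge", 320),   # 8 x A100 40GB
    ("ml.p4de.24xlarge", 640),  # 8 x A100 80GB
]


def pick_instance(weights_gb: float, headroom: float = 1.2) -> Optional[str]:
    """Return the first listed instance whose total GPU memory covers weights_gb * headroom.

    headroom is an assumed safety factor for activations, KV cache, and runtime overhead.
    """
    needed = weights_gb * headroom
    for instance_type, gpu_gb in GPU_MEMORY_GB:
        if gpu_gb >= needed:
            return instance_type
    return None  # nothing listed is large enough
```

A model right at the boundary, such as ~80 GB of weights against 96 GB of GPU memory, is exactly where real-world overhead decides the outcome. In that case, test on the next size up before committing.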

Autoscaling and Multi-GPU Instances

- For production environments with fluctuating load, enable autoscaling on multi-GPU instances (like p4d.24xlarge), which allows for scaling based on usage patterns.
- You may also deploy the model to a multi-model endpoint if running multiple versions of the model in parallel.
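SageMaker endpoint autoscaling is configured through the Application Auto Scaling service. You register the endpoint variant as a scalable target, then attach a target-tracking policy. This sketch tracks the predefined invocations-per-instance metric. The capacity bounds, target value and policy name are placeholder assumptions, and `enable_autoscaling` is a hypothetical helper. The endpoint and variant names match the deployment code later in this section.

```python
def scaling_resource_id(endpoint_name: str, variant_name: str = "AllTraffic") -> str:
    """Application Auto Scaling identifies a SageMaker variant by this resource ID."""
    return f"endpoint/{endpoint_name}/variant/{variant_name}"


def enable_autoscaling(endpoint_name: str, min_count: int = 1, max_count: int = 2,
                       invocations_per_instance: float = 5.0, region: str = "us-east-1") -> None:
    """Register the variant as scalable and attach a target-tracking policy."""
    import boto3  # deferred so scaling_resource_id works without boto3 installed

    client = boto3.client("application-autoscaling", region_name=region)
    resource_id = scaling_resource_id(endpoint_name)
    client.register_scalable_target(
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension="sagemaker:variant:DesiredInstanceCount",
        MinCapacity=min_count,
        MaxCapacity=max_count,
    )
    client.put_scaling_policy(
        PolicyName=f"{endpoint_name}-invocations",
        ServiceNamespace="sagemaker",
        ResourceId=resource_id,
        ScalableDimension="sagemaker:variant:DesiredInstanceCount",
        PolicyType="TargetTrackingScaling",
        TargetTrackingScalingPolicyConfiguration={
            "TargetValue": invocations_per_instance,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
        },
    )
```

Keep `max_count` low while experimenting, since each additional GPU instance multiplies the hourly bill.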

Interacting with our opponent AI

After the model has been trained, we want to deploy the model to an AWS model endpoint so we might be able to interact with our imaginary AI opponent.

SageMaker generates a model artifact, which contains the trained model parameters and the configuration files needed for deployment. For non-technical folks, think of this as your AI application: like any other software, it needs to be installed and run.

Model Artifact Creation

- Once training or fine-tuning completes, SageMaker saves the model artifact in an S3 bucket. This artifact includes the model’s weights, configuration files, and any other necessary metadata.

- You can specify the S3 location for these artifacts during the training job setup, and SageMaker automatically stores them there once training is complete.

Model Deployment Options

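We'll need to make sure the model is allowed to run the custom code that ships with it. This Falcon checkpoint includes its own Python modeling files (configuration_RW.py and modelling_RW.py in the archive listing above), and Hugging Face transformers will only execute such files when the model is loaded with trust_remote_code=True. Despite the name, the flag has nothing to do with network access: it does not make the endpoint listen on a port or accept requests, which the SageMaker endpoint handles on its own.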

Set Up the Model with trust_remote_code=True:

```python
import boto3

sagemaker_client = boto3.client("sagemaker", region_name="us-east-1")

# Define your SageMaker role and model data location
role = "arn:aws:iam::123456789012:role/service-role/AmazonSageMaker-ExecutionRole"  # Replace with your role
model_data = "s3://your-bucket/path-to-your/model.tar.gz"  # Replace with your model S3 path
image_uri = "763104351884.dkr.ecr.us-east-1.amazonaws.com/huggingface-pytorch-inference:2.0.0-transformers4.28.1-gpu-py310-cu118-ubuntu20.04"

# Create the model with `trust_remote_code=True`.
# Which environment variable a container reads for this setting varies by image;
# confirm the exact name in your container's documentation.
response = sagemaker_client.create_model(
    ModelName="my-hf-model",
    PrimaryContainer={
        "Image": image_uri,
        "ModelDataUrl": model_data,
        "Environment": {
            "trust_remote_code": "true",
        },
    },
    ExecutionRoleArn=role,
)

print("Model created with trust_remote_code=True.")
```

Create an Endpoint Configuration and Deploy the Endpoint:

```python
import boto3

sagemaker_client = boto3.client("sagemaker", region_name="us-east-1")

# Create endpoint configuration for the model created above
endpoint_config_name = "my-hf-model-endpoint-config"

sagemaker_client.create_endpoint_config(
    EndpointConfigName=endpoint_config_name,
    ProductionVariants=[
        {
            "VariantName": "AllTraffic",
            "ModelName": "my-hf-model",
            "InitialInstanceCount": 1,
            "InstanceType": "ml.g5.24xlarge",  # Adjust instance size as needed
        }
    ],
)

# Deploy the endpoint
endpoint_name = "my-hf-model-endpoint"

sagemaker_client.create_endpoint(
    EndpointName=endpoint_name,
    EndpointConfigName=endpoint_config_name,
)

# create_endpoint returns immediately; the endpoint stays in "Creating" until it is ready.
print("Endpoint creation started.")
```
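Once the endpoint exists, you can finally talk to the imaginary opponent. This is a sketch that waits for the endpoint to reach InService and then sends one prompt through the SageMaker runtime API. The `{"inputs": ..., "parameters": {...}}` body shape is the one Hugging Face text-generation containers commonly accept. It is an assumption here, as are the generation parameters and the helper names `build_payload` and `ask_opponent`.

```python
import json
from typing import Any


def build_payload(prompt: str, max_new_tokens: int = 128, temperature: float = 0.7) -> str:
    """Serialize a request in the {"inputs": ..., "parameters": {...}} shape."""
    return json.dumps({
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens, "temperature": temperature},
    })


def ask_opponent(endpoint_name: str, prompt: str, region: str = "us-east-1") -> Any:
    """Wait for the endpoint to be InService, send one prompt, and return the parsed JSON reply."""
    import boto3  # deferred so build_payload can be used without boto3 installed

    sagemaker_client = boto3.client("sagemaker", region_name=region)
    # create_endpoint returns immediately; this waiter polls until the status is InService.
    sagemaker_client.get_waiter("endpoint_in_service").wait(EndpointName=endpoint_name)

    runtime = boto3.client("sagemaker-runtime", region_name=region)
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(prompt),
    )
    return json.loads(response["Body"].read())
```

Usage: `ask_opponent("my-hf-model-endpoint", "What will our competitor announce next quarter?")`. Remember to delete the endpoint when you are done, since it bills for every hour it stays up.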

Putting it all together

The notion that a novice can create a “clone” of oneself, a digital mirror that encapsulates our thoughts, sentiments, and knowledge, is both exhilarating and alarming.

The potential for AI to emulate not only our ideas but even our intentions poses questions that stretch beyond technology into the realms of morality, security, and privacy.

While these tools can be harnessed to amplify human creativity, enhance productivity, and even aid in anticipating malicious acts, they can just as easily be wielded as weapons against us, turning our own thoughts and insights into tools of manipulation.

Imagine the strategic advantage: a political candidate forecasting their opponent’s every argument, a lawyer gauging a judge’s likely stance, or a corporation predicting a competitor’s moves. Yet, with this power comes a troubling vulnerability. When any individual or organization can harness readily available technology to “clone” the minds of others, the doors are open for exploitation.

As we forge ahead, the responsibility lies with all of us to navigate this technology wisely—appreciating its potential while remaining keenly aware of its risks. This is the threshold we stand upon, and the choices we make today will define how AI influences our world tomorrow.