A strategic look at adoption, exposure and what to do next …

Executive take

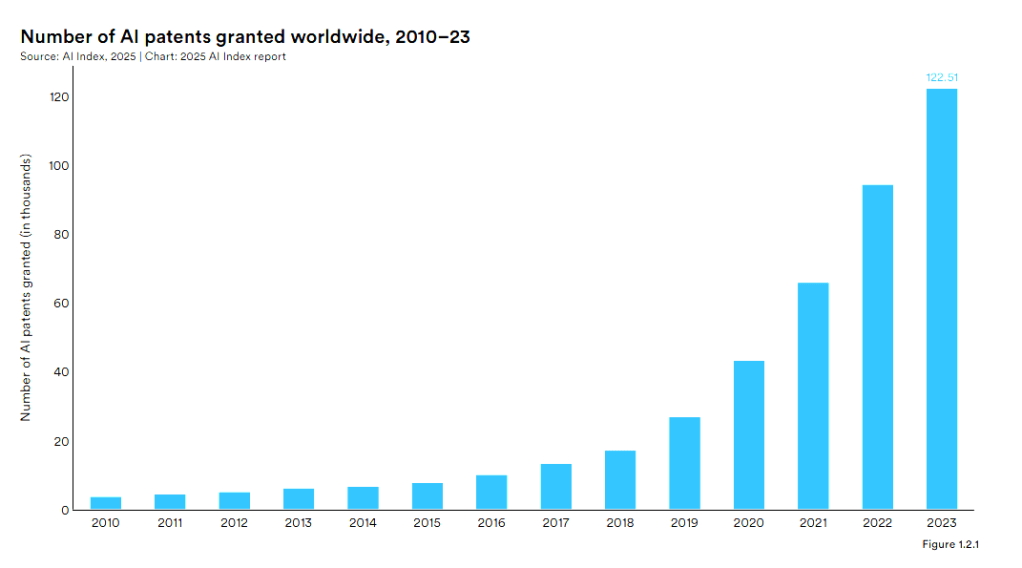

AI adoption is rising fastest in Software Engineering, Marketing/Sales, and Service/Customer Ops, while Finance/HR/Legal are catching up more cautiously. Globally, we’ve seen exponential growth in AI innovation and in patent filings for AI solutions.

Exponential AI Innovation = Growing Attack Surface

Source: Stanford “State of AI”

That uneven uptake of AI across business units, combined with rapid product change, creates an asymmetric cyber risk. The teams gaining the most productivity often introduce the broadest risk via tool actions, connectors and data exposure.

Meanwhile, the adversary “time-to-attack” is shrinking as attackers apply the same AI accelerants to reconnaissance, exploit iteration and social engineering.

Unless we realign controls around identity, data paths and tool actions, the attack surface will continue to expand faster than our mitigations.

- Source: McKinsey & Company

- Source: Stanford “State of AI”

Growing AI Security Capability Gap

What business trends are introducing cyber risk?

- Coding assistants & dev agents are now part of day-to-day engineering work. They touch source code, secrets, CI/CD and cloud APIs, and they can introduce serious command-and-control situations.

- Productivity suites / Office copilots interleave with mailboxes, documents, chats and calendars. They summarize across repositories, which is great for speed but bad when an agent gets tricked into leaking or destroying your business data.

- Contact-center & service copilots ingest external conversations and knowledge bases, then act through CRM and ticketing—prime terrain for tool-abuse exfiltration if scopes aren’t minimal.

- “Autonomous”/agentic pilots exist, but at scale most value is still coming from assisted workflows. Risk still concentrates in connectors, actions, and data retention, not model weights.

Source: McKinsey & Company

This is a management problem disguised as a security issue.

- Unknown usage: Which AI tools are in play? Who’s using them? How much?

- Unknown data flow: What business data is entering prompts, logs and vendor clouds? Secrets, passwords, PII?

- Unknown authority: What can each agent actually do and with whose credentials?
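One way to make those three unknowns concrete is a small inventory record per tool. The sketch below is illustrative only: the `AIToolRecord` class, its field names and the sample values are hypothetical, not a schema from any product.

```python
from dataclasses import dataclass


@dataclass
class AIToolRecord:
    """One row in a hypothetical AI tool inventory."""
    tool: str
    users_by_team: dict[str, int]   # unknown usage: who uses it, and how much
    data_classes: set[str]          # unknown data flow: e.g. "PII", "secrets"
    agent_scopes: set[str]          # unknown authority: what the agent can do
    runs_as: str = "unknown"        # whose credentials the agent acts with

    def open_questions(self) -> list[str]:
        """List which of the three unknowns are still unanswered."""
        questions = []
        if not self.users_by_team:
            questions.append("usage")
        if not self.data_classes:
            questions.append("data flow")
        if not self.agent_scopes or self.runs_as == "unknown":
            questions.append("authority")
        return questions


# Illustrative values only.
record = AIToolRecord(
    tool="code-assistant",
    users_by_team={"Engineering": 40},
    data_classes=set(),
    agent_scopes={"repo:write"},
)
print(record.open_questions())  # ['data flow', 'authority']
```

A record with open questions is a prompt for a conversation with the owning team, not a verdict on the tool.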

Clicking a few toggles won’t fix it. “Zero-data retention” won’t either. This is as much a human problem as a technical one: it is driven by a hunger for increased productivity, while most AI tools still lack hardening, threat detections, SIEM-ready logs and coherent access control between agents and data.

The business risk story starts with privileged, multi-agent dev workstations wired to production via ad-hoc connectors—systems that are easy to trick yet powerful enough to cripple a business.

In the near future, the story unfolds into RPA bots and insecure semi-autonomous/autonomous agents connected to critical data sources with powerful automation capabilities, one “bad” LLM inference away from bringing down production systems.

The solution isn’t a tactical approach to “hardening” agents or securing an MCP server, although I find the nitty-gritty fascinating.

Instead, the solution requires an enterprise security strategy built around inventorying SaaS and desktop apps, measuring adoption across business roles, and mapping usage patterns to business-process risk, all overlaid with security capabilities that, frankly, don’t even exist yet.

Without this view of the broader landscape, we’ll be playing catch-up: the adversary’s time to attack decreases with AI tools, the enterprise attack surface expands, and the business’s ability to mitigate cyber risk falls behind.

Enterprise Security AI Threat Landscape

Role-by-role connection

| Business Roles | Typical AI Usage | Data & system access | Most likely threat mechanics |
|---|---|---|---|
| Sales Teams | Email/doc copilots; AI sidebars; CRM agents | CRM PII, emails, calendars | Indirect prompt injection via links/DOM → send-email, calendar invites, drive access |
| Marketing Manager | Content copilots; sidebars; social connectors | Brand accounts, CMS/DAM | URL/file injections → unwanted posts or data pull via connectors |
| Customer Support Agent | Copilot in ticketing; RPA for updates | Tickets (PII), CRM | Malicious customer content → tool abuse (account notes/updates; exfil via paste/base64) |
| Software Engineer | Code assistant; repo bots | Repos, CI, secrets | Insecure code patterns; dependency/supply-chain issues |
| SRE/DevOps | Copilots; workflow agents | Infra consoles/pipelines | Agent over-reach (run scripts, change configs) |
| Data Scientist/Analyst | Notebook copilots; vector DBs | Warehouses; telemetry | Data leakage via prompts/logs/embeddings |
| HR Generalist / Recruiter | Doc/email copilots | Employee PII; offers | PII in chat logs; cross-border retention |
| Payroll Specialist | RPA + copilots | Payroll/SSN/bank | RPA task misuse; credential reuse |
| Finance Analyst | Copilots; RPA in ERP | Forecasts/ERP | Model-assisted exfil (email/export) |
| Legal Counsel | Limited copilots (summaries) | Privileged docs/DMS | Retention or 3P processing of privileged comms |
| Executive Assistant | Copilots; calendar/email agents | Exec calendars/contacts | Agent sends from exec identity; invite scraping |

Threat Sources: https://owasp.org/www-project-top-10-for-large-language-model-applications

Adoption By The Numbers

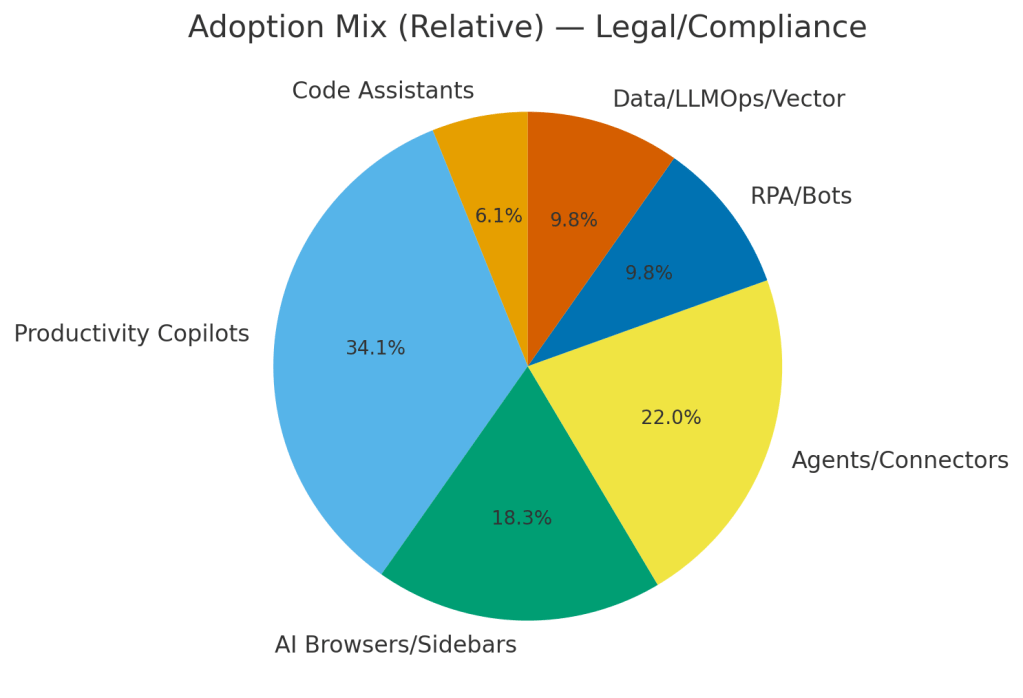

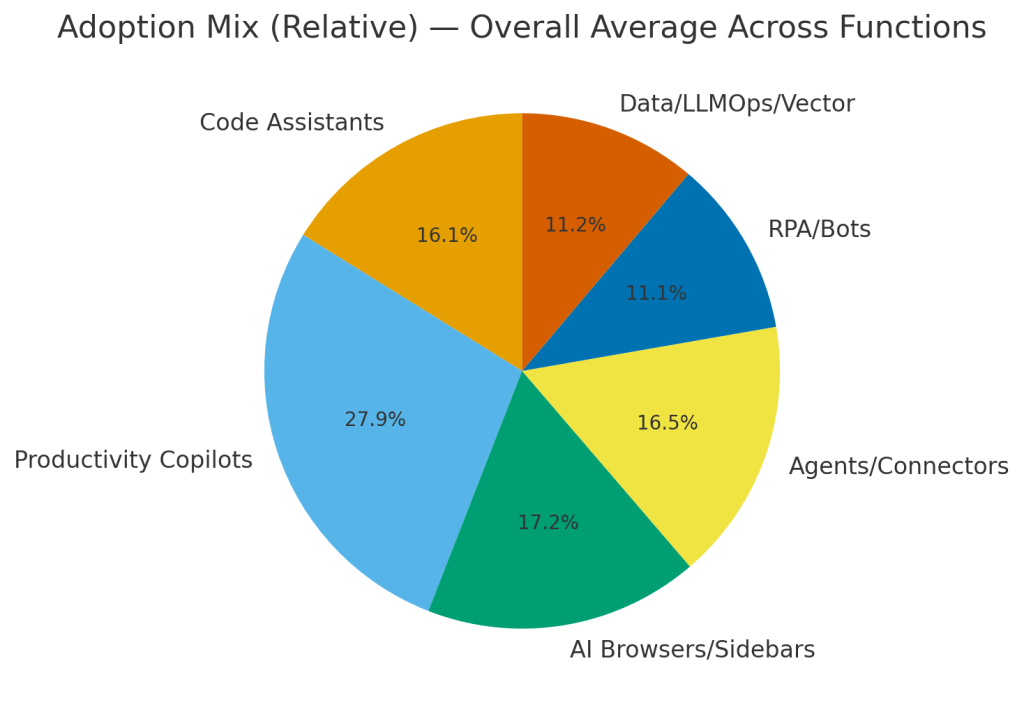

Marketing & Sales are trending toward heavy use of copilots plus AI browsers/sidebars over mail/docs/CRM. The biggest risks are prompt injection, connector-based data exfil and accidental sharing.

Service/Customer Ops is leaning toward agents/connectors plus RPA touching tickets/KB/calls, so watch for tool abuse, over-broad scopes and malicious customer content driving unintended AI actions.

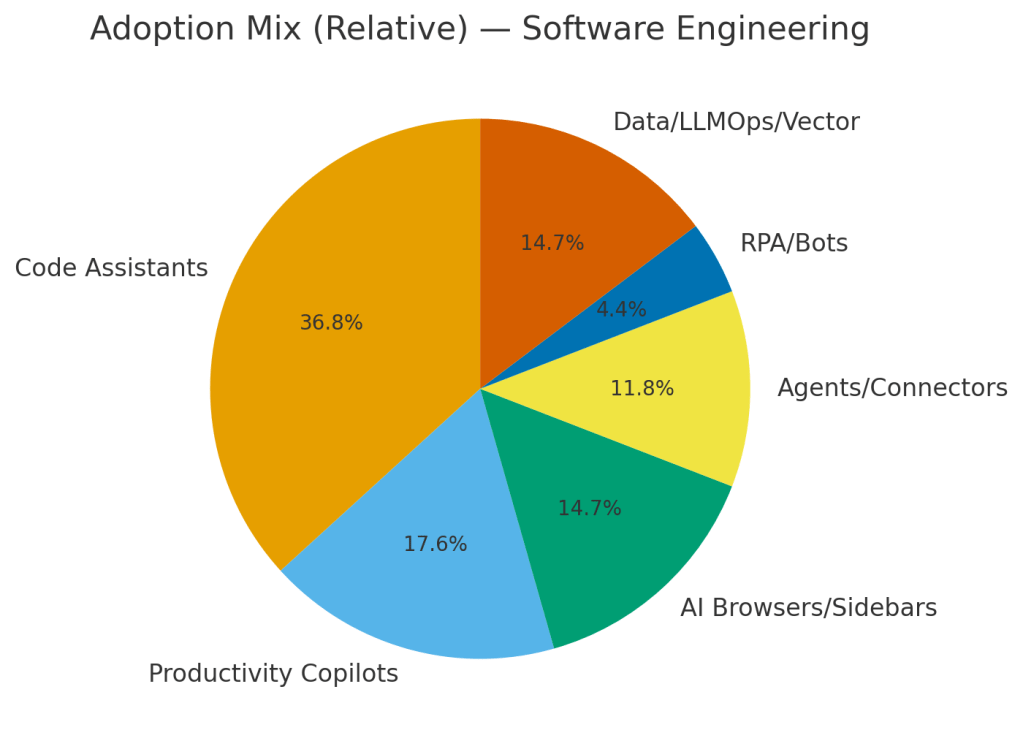

Software Engineering is dominated by code assistants, which present supply-chain exposure, secrets in chat conversations, insecure agent permissions, broad RAG data access and, eventually, overly broad and dangerous administration capability.

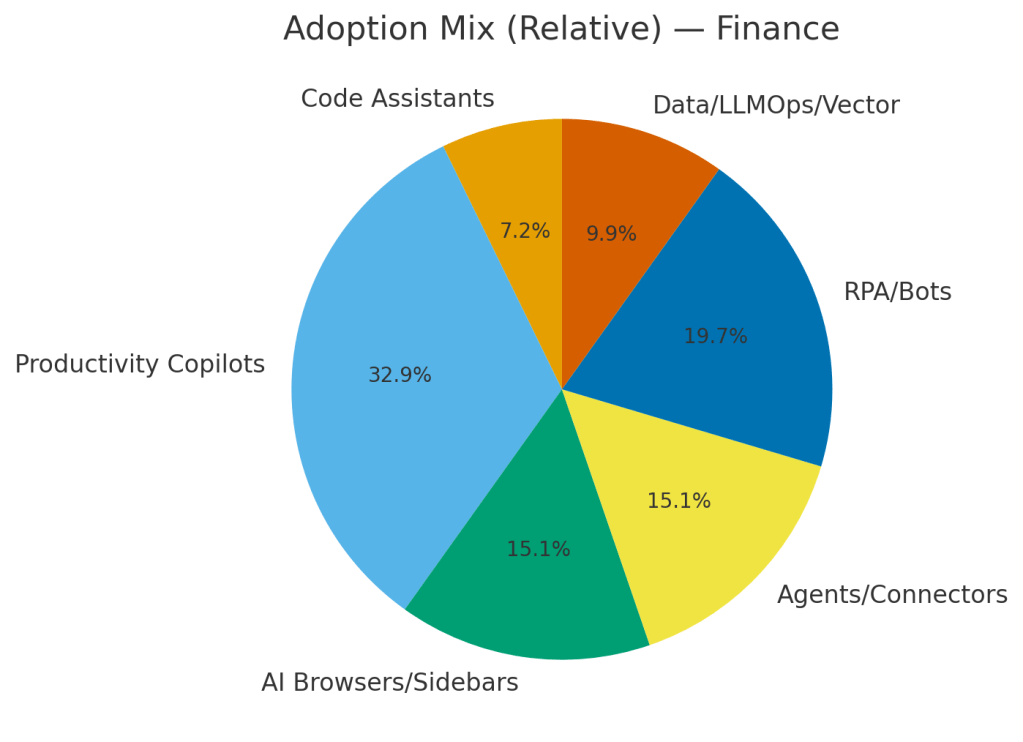

Finance / HR / Legal are slower adopters but handle crown-jewel data, creating retention/compliance risk and cross-doc leakage.

Data Sources:

- Microsoft Work Trend Index 2025, Published Apr 23, 2025.

- McKinsey State of AI 2025, Published Mar 2025.

- Stack Overflow Developer Survey 2025, Published 2025.

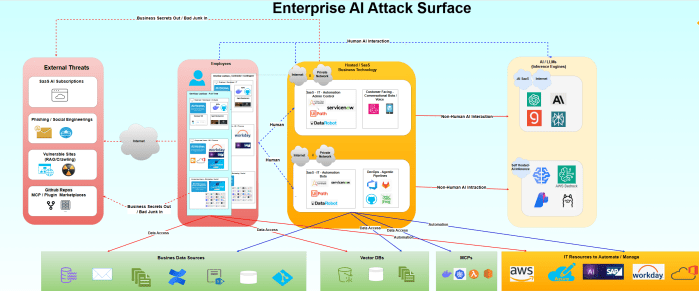

Cyber security threats

The 10,000-foot view: humans are at the center driving consumption, while heterogeneous AI solutions propagate across our desktops, DevOps tooling, HR tooling and creative suites, seemingly amplifying the problem.

| Threat source | Primary vectors | Who/what gets hit first | Why it succeeds |
|---|---|---|---|
| Unwitting employees (any BU) | Remote/indirect prompt injection via URLs, docs, chat transcripts; copy-paste of sensitive data into chats; enabling over-permitted connectors | Marketing/Sales, Service Ops, Exec Assistants (high external content + agent actions) | “Cool/easy” bias; default-on memory; confirm-to-act disabled |
| Contractors & temp staff | Use of personal/free plans; unmanaged devices; side-loaded plugins | Content teams, SDRs, external CS agents | No SSO/SCIM; data retention unknown; cross-tenant data; no workstation control; no managed AI |
| External internet actors (opportunistic & targeted) | Malicious pages/links/images that carry hidden prompts; SEO poisoning; drive-by injection to AI sidebars; phishing 2.0 with agent-aware lures | Anyone with agentic browsers/sidebars | No credential theft needed; the agent already has access |
| State-aligned/APT | Long game: poisoning docs/KBs; supply chain (plugins/RPA packs); model inversion research | Engineering, vendor ecosystems | Patience + resources + upstream supply-chain leverage + reliance on AI w/o human intervention |
| Supply-chain partners (SaaS, plugins, RPA marketplace) | Over-broad OAuth scopes; weak isolation; telemetry co-mingling data sources; weak retention | Agents/connectors across all BUs | “Trusted” connectors bypass scrutiny; weak provenance on MCP, agent plugins and marketplaces |
| AI-assisted developers (internal & contractor) | Poor coding amplified by code assistants; insecure patterns spread quickly; vulnerable agent actions | Engineering, internal tooling | Demand for more with less; insecure practices propagate; insecure multi-agent dev workstations |

AI “Kill Chain” in a “Nutshell”

- Hostile content placed in web pages, docs, tickets, PDFs, images (or seeded in plugins/marketplaces).

- User/agent loads content; sidebar/agent ingests hidden instructions.

- Model interprets prompts; tool call chosen (email send, file write, HTTP POST).

- Execute: a connector with over-broad system settings, MCP access or OAuth scopes does the damage (exfil, command, post).

- Persist: data theft, MCP CRUD
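The chain above breaks if the execute step is gated. Below is a minimal sketch of such a gate, assuming a hypothetical agent runtime that can intercept a tool call before it runs and knows whether external content was part of the prompt. The action names, the `ToolCall` type and the `decide` function are all made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical action names; real agents expose their own tool catalogs.
READ_ONLY = {"search_docs", "read_ticket"}


@dataclass(frozen=True)
class ToolCall:
    action: str
    granted_scopes: frozenset[str]  # actions this agent was explicitly given
    content_trusted: bool           # False if external content was in the prompt


def decide(call: ToolCall) -> str:
    """Return 'allow', 'confirm' or 'deny' for a proposed tool call."""
    if call.action not in call.granted_scopes:
        return "deny"      # least privilege: the scope was never granted
    if call.action in READ_ONLY:
        return "allow"     # no side effects, so no confirmation needed
    if call.content_trusted:
        return "allow"
    return "confirm"       # untrusted content is driving a side effect


# A hidden instruction in a web page asks the agent to send an email.
call = ToolCall("send_email", frozenset({"send_email", "read_ticket"}), False)
print(decide(call))  # confirm
```

The design choice here is that anything outside the read-only set is treated as a side effect by default, so a newly added tool falls into confirm-to-act rather than silently running.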

Indicators to watch

- Spike in agent egress to unapproved AI domains

- Spike in installs of Claude Code, Codex, Atlas GPT

- Spike in federated logins to AI SaaS solutions

- Spike in bytes out to AI SaaS providers

- Growth in connector scopes (write/admin) vs. read-only

- Growth in MCP adoption and development

- Rise in AI-generated PRs

- Growth in AI repo and marketplace access; new/unvetted plugins in use; expired signatures; missing SBOM.
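Two of these indicators are easy to prototype once the telemetry exists. The sketch below assumes you already export a daily metric (for example bytes out to AI SaaS providers) and a list of connector scopes; the function names, the `:write`/`:admin` scope naming and the threshold `k` are assumptions for illustration, not a vendor format.

```python
from statistics import mean, pstdev


def is_spike(history: list[float], latest: float, k: float = 3.0) -> bool:
    """Flag `latest` if it exceeds the baseline mean by more than k std devs."""
    if len(history) < 2:
        return False  # not enough baseline to judge
    return latest > mean(history) + k * pstdev(history)


def write_scope_share(scopes: list[str]) -> float:
    """Fraction of connector scopes that grant write or admin rights."""
    if not scopes:
        return 0.0
    risky = [s for s in scopes if s.endswith((":write", ":admin"))]
    return len(risky) / len(scopes)


# Illustrative numbers only (GB out per day, and four connector scopes).
baseline = [10, 12, 11, 9, 10]
print(is_spike(baseline, 40))  # True
print(write_scope_share(["mail:read", "mail:write", "drive:admin", "crm:read"]))  # 0.5
```

A mean-plus-k-sigma rule is a deliberately crude baseline; a SIEM or UEBA product will have its own anomaly logic, and tracking the write/admin share month over month matters more than any single reading.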

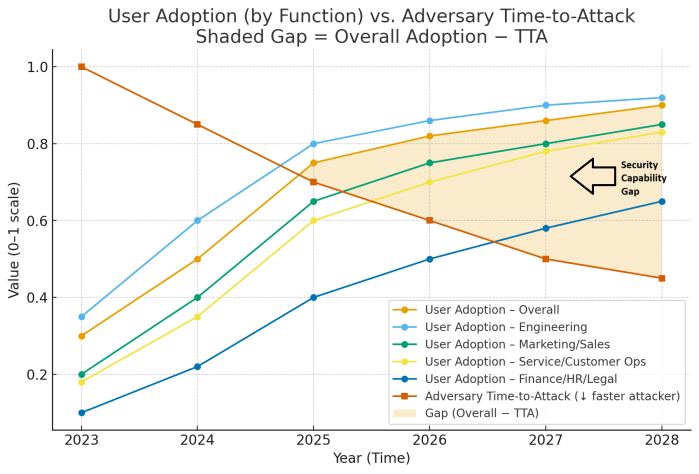

Widening AI Security Gap

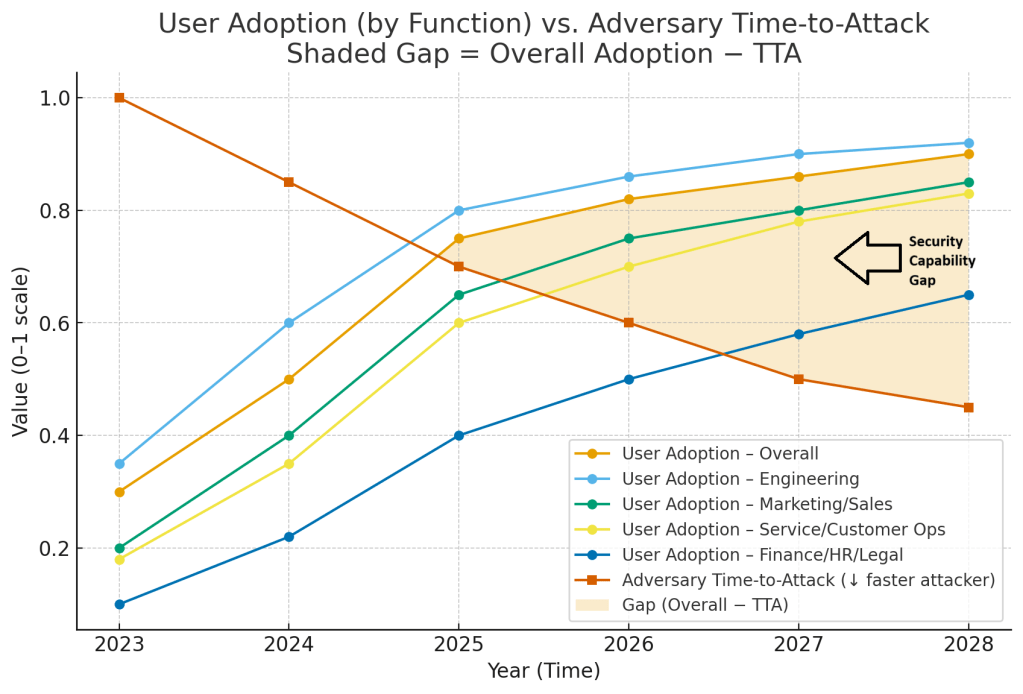

Adoption vs. Adversary: A Simple Way to See Your AI Risk Gap

What am I trying to answer with this model?

Two things:

- How fast are people adopting AI (overall and by team)?

- Are attackers getting faster at building and running attacks because of AI?

When you put those on the same chart, the space between the two lines is the possible risk gap. That shaded area shows where adoption might be outrunning your security readiness to address emerging AI threats. Ideally, we’d improve the model by plotting the Security team’s adoption of AI against employee adoption and attacker adoption.

Legend

- Blue line: Employee AI adoption over time. I draw one overall line and a few by function (Engineering, Marketing/Sales, Service/Customer Ops, Finance/HR/Legal).

- Orange line: “Time-to-attack” proxy, a placeholder for how quickly a bad actor can go from idea to a working attack. As attackers use AI to write code, craft lures or tweak exploits, that time tends to decrease.
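The two lines and the shaded area between them can be sketched in a few lines of code. This is one possible reading of the chart, not the exact model behind it: it assumes both series have already been scaled to 0–1 over the same periods, and the function names and sample values are hypothetical.

```python
def risk_gap(adoption: list[float], attack_time: list[float]) -> list[float]:
    """Per-period gap: adoption minus time-to-attack, both scaled to 0-1."""
    if len(adoption) != len(attack_time):
        raise ValueError("series must cover the same periods")
    return [round(a - t, 3) for a, t in zip(adoption, attack_time)]


def shaded_area(gap: list[float]) -> float:
    """Trapezoidal area of the positive part of the gap (unit spacing)."""
    positive = [max(g, 0.0) for g in gap]
    return sum((x + y) / 2 for x, y in zip(positive, positive[1:]))


# Illustrative values only: adoption rises while time-to-attack shrinks.
blue = [0.2, 0.4, 0.6, 0.8]
orange = [1.0, 0.8, 0.5, 0.3]
gap = risk_gap(blue, orange)
print(gap)  # [-0.8, -0.4, 0.1, 0.5]
```

The gap turns positive in the period where the lines cross, and `shaded_area(gap)` grows from there; that crossover is the point to look for on your own chart.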

I used a few reputable studies as anchors for the model to try to base my approach on some real-world data points.

Adoption (blue line) anchors

- Microsoft Work Trend Index 2025

- McKinsey State of AI 2025

- Stack Overflow Developer Survey 2025

Adversary (orange line) anchors

- GitHub Copilot research

- BCG GenAI experiments

- Mandiant M-Trends 2025

- Microsoft Digital Defense Report 2025

Practical use?

Healthy gap? Keep investing in governance, agent hardening, detections, non-human identity (NHI) for agentic solutions and red-teaming for indirect prompt injection.

Consider replacing my public anchors with your telemetry

- Pull monthly active users by tool class and team from SSO/SCIM, vendor admin logs and CASB.

- Normalize to 0–1 (users ÷ headcount) and plot your own lines.
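The normalization step is simple division, but a short sketch shows the edge cases worth handling. The function name and the team figures are hypothetical; the inputs stand in for whatever your SSO/SCIM, vendor admin logs or CASB export.

```python
def adoption_rate(
    monthly_active: dict[str, int], headcount: dict[str, int]
) -> dict[str, float]:
    """Monthly active users divided by headcount per team, capped at 1.0."""
    rates = {}
    for team, people in headcount.items():
        if people <= 0:
            continue  # skip teams with no headcount on record
        users = monthly_active.get(team, 0)  # no log entries means zero users
        rates[team] = min(users / people, 1.0)
    return rates


# Illustrative numbers only.
mau = {"Engineering": 45, "Finance": 5}
heads = {"Engineering": 60, "Finance": 20, "Legal": 10}
print(adoption_rate(mau, heads))
# {'Engineering': 0.75, 'Finance': 0.25, 'Legal': 0.0}
```

The cap at 1.0 guards against contractors or shared accounts pushing active users above headcount; if that happens often, it is itself a finding.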

Create your own attacker-speed proxy

- Use incident metrics (time from first lure to data staging), patch-to-exploit lag, and red-team cycle time.
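One possible way to fold those metrics into a single orange line is to express each as a ratio to its own baseline and average the ratios. This is an assumption about how to combine them, not a standard formula; the metric keys and day counts are hypothetical.

```python
def attacker_speed_proxy(
    baseline_days: dict[str, float], current_days: dict[str, float]
) -> float:
    """Mean ratio of current to baseline cycle time across shared metrics.

    A value below 1.0 means attack cycles are shorter than at baseline.
    """
    ratios = [
        current_days[metric] / days
        for metric, days in baseline_days.items()
        if metric in current_days and days > 0
    ]
    if not ratios:
        raise ValueError("no overlapping metrics to compare")
    return sum(ratios) / len(ratios)


# Illustrative numbers only, all in days.
baseline = {"lure_to_staging": 10, "patch_to_exploit": 30, "red_team_cycle": 20}
current = {"lure_to_staging": 5, "patch_to_exploit": 15, "red_team_cycle": 10}
print(attacker_speed_proxy(baseline, current))  # 0.5
```

Because the result is already relative to a baseline of 1.0, it sits on the same 0–1 style axis as the adoption lines, provided cycle times are shrinking rather than growing.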

Read the gap and act.

What Can YOU do?

If there’s one lesson here, it’s that AI security risks are a problem to be managed, not a feature to be toggled.

Adoption is racing ahead unevenly across the business while attacker cycle times shrink. The only way to see the forest, and not get lost in the AI trees, is to run AI like any other program that needs to be managed.

What are some of my take-aways?

- Name an owner (AI Platform + Security)

- Measure how big the risk is and who’s creating it in the business

- Prioritize by impact where AI touches customers and product

- Set non-negotiables in procurement (SSO/SCIM, RBAC, SIEM integration, retention, guard rails) so adoption doesn’t outrun control.

- Set standards around agent hosting and agent isolation, agent hardening, agent access and SIEM integration

- Measure Security’s adoption vs. attackers’ speed and adoption

- Publish a simple scorecard monthly and fund the gaps (Agentic IAM, NHI monitoring, guard rail solutions, prompt injection test suites)
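The procurement non-negotiables in the list above lend themselves to a simple automated check that can feed the monthly scorecard. A minimal sketch follows, assuming vendor answers are captured as true/false flags; the control keys and the sample vendor are hypothetical.

```python
# Hypothetical keys mirroring the non-negotiables: SSO/SCIM, RBAC,
# SIEM integration, retention and guard rails.
REQUIRED_CONTROLS = (
    "sso_scim",
    "rbac",
    "siem_integration",
    "retention_controls",
    "guard_rails",
)


def missing_controls(vendor: dict[str, bool]) -> list[str]:
    """Return required controls the vendor has not confirmed."""
    # An unanswered control counts as missing, not as a pass.
    return [c for c in REQUIRED_CONTROLS if not vendor.get(c, False)]


# Illustrative questionnaire response.
vendor = {"sso_scim": True, "rbac": True, "siem_integration": False}
print(missing_controls(vendor))
# ['siem_integration', 'retention_controls', 'guard_rails']
```

Treating silence as a gap keeps the burden of proof on the vendor, which is the point of calling these controls non-negotiable.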